Rocket Insights recently completed a project with one of the largest affiliate marketing consulting firms in the US which turned out to be a great success for everyone involved. We asked one of the lead consultants on the project, Jason Lombardo, to answer a few questions which we hope will demonstrate the vast territory that is covered by a modern Data Architect / Engineer and how it all eventually comes together into a simple and friendly solution for the end users.

How did this project start out?

Jason: When I first joined the project we knew the primary intermediary of the click-stream data that the client had been using to date was stepping out of that business so we needed to become self-sufficient in dealing with the data from its various sources directly. This also meant we would now be fully responsible for all of the metrics and reports as well. Essentially, we needed a full analytics stack from scratch and we had only a few months to deliver it.

The first thing I did when I joined the team was try to work backwards from the end-user experience to identify where we could simplify and potentially save time given our fairly lean team. The first area I observed was the reporting and the initial plan to build a configurable report designer and viewer in React/JavaScript. While it may have yielded the most customizable branding and possibly endless visuals, there is a great deal of administration and automation that comes with modern Business Intelligence (BI) platforms that, while it’s not the sexy stuff on the front-end, it is essential functionality for any enterprise. For example, having multiple stages of publishing and review before sharing out reports broadly, having security-trimmed access to datasets and calculations, and having automated data refresh are just a few of the back-end features that keep a professional BI operation running smoothly.

So, rather than reinvent, we pivoted to performing a vendor assessment of the market-leading data modeling and visualization platforms, which included Power BI, Qlik and Tableau. We assisted in the calculation of the overall total cost of ownership for the embedded BI components, built demonstration models and reports based on real click-stream data as a proof of concept and finally presented our findings to the key project stakeholders to secure our direction moving forward. In the end, we decided to use Power BI as our Business Intelligence platform for the analytical layer and the reports and dashboards.

Now that you had an end-user experience in mind, where did you go next?

Jason: Well, next we turned to the design of the data warehouse itself. We knew there were existing streams of data we needed to work with so we analyzed the data to understand what it looked like coming from its source. We then collaborated with subject matter experts at our client to define an ideal business-level target data model for their analysis and reporting. We leveraged data warehouse architecture best practices to map the target data model to a data warehouse star schema design pattern for efficiency, scale and performance.

So now you have your front-end and your back-end. How do you glue them together?

Jason: With some good old fashioned SQL and some AWS Glue, of course. To bring our data architecture to life we used SQL scripts to create all of the tables, relationships, constraints, indexes, and views needed in the data warehouse. For our data warehouse, we used AWS RedShift which is built on PostgreSQL. We then used some more SQL to populate 85 years worth of temporal metadata so we could perform complex time-series and time-intelligence analytics. And finally, we used a bit more SQL to create all of the tables that we needed for our Master Data Management (MDM) relational database, for which we used PostgreSQL in AWS Relational Database Service (RDS).

Okay, so databases are now built. How do you get the data in and out?

Jason: That’s where AWS Glue comes in which is an Extract Transform Load (ETL) platform built on top of Apache Spark. Glue uses Python, so we created our own amazing Python library to abstract away the complexities of AWS Glue and Spark so we could be more efficient and promote consistency across all of our ETL scripts. Most of our scripts ended up reading like plain English which worked well for troubleshooting and as self-documenting code.

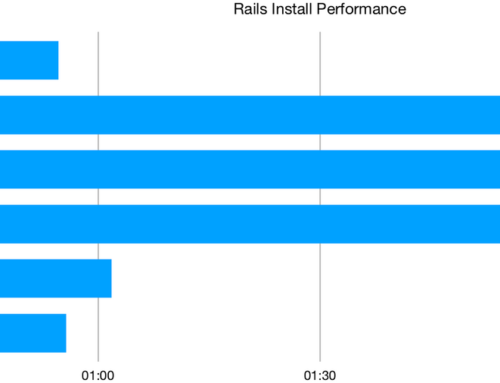

We designed ETL processes for each of the data sources diagramming the transformations and the expected schema at each step through the process. One of them ended up looking like this:

OMG, that is both beautiful and terrifying how complex that looks. Do you really understand that?

Jason: Yes, I made it. Now we had a fully-working end-to-end data pipeline from source to target all modeled out the way the business wanted it. Then we needed to manage that data and keep it tidy. For that we built a layer of web services and an administrative user interface (UI) on top of the Master Data Management database we built earlier so Data Analysts at our client could edit the values of their various dimensions and have those dimensions reflected in their downstream reports and dashboards. For the administrator portal we used Node.js and TypeScript for the web services and Sequelize for data access.

All that is left is the reports themselves, right?

Jason: I wish. First we needed to do some heavy math. It turns out that counting the clicks themselves isn’t enough. But there is a whole field of statistics built around clicks which I won’t begin to explain because I still barely understand it myself. Luckily, I knew how to look up the formulas in Power BI and the subject matter experts at our client knew how to use them to build the metrics we needed for our reports and visualizations. For this step, the Data Analysts at our client who understand all of the math are the real heroes.

We just helped them make the connection between Power BI and the data warehouse in AWS so we could bring the data into Power BI’s in-memory analytics engine. On top of that we helped build their semantic business data model and published their first certified dataset to the Power BI cloud service to be leveraged by the hundreds of custom client reports that would be built subsequently.

It’s clear from talking to Jason that building a full analytics stack from the ground up is no mean feat. It’s a potential minefield of complicated nuances and tools. But we, for one, admire how a data architect like Jason is able to pull everything together to create a seamless, seemingly simple, finished product.

Questions? Find Jason Lombardo and myself, Matt Merrill, on LinkedIn.

Leave A Comment